SeBS, the serverless benchmark suite

Understanding performance challenges in the serverless world.

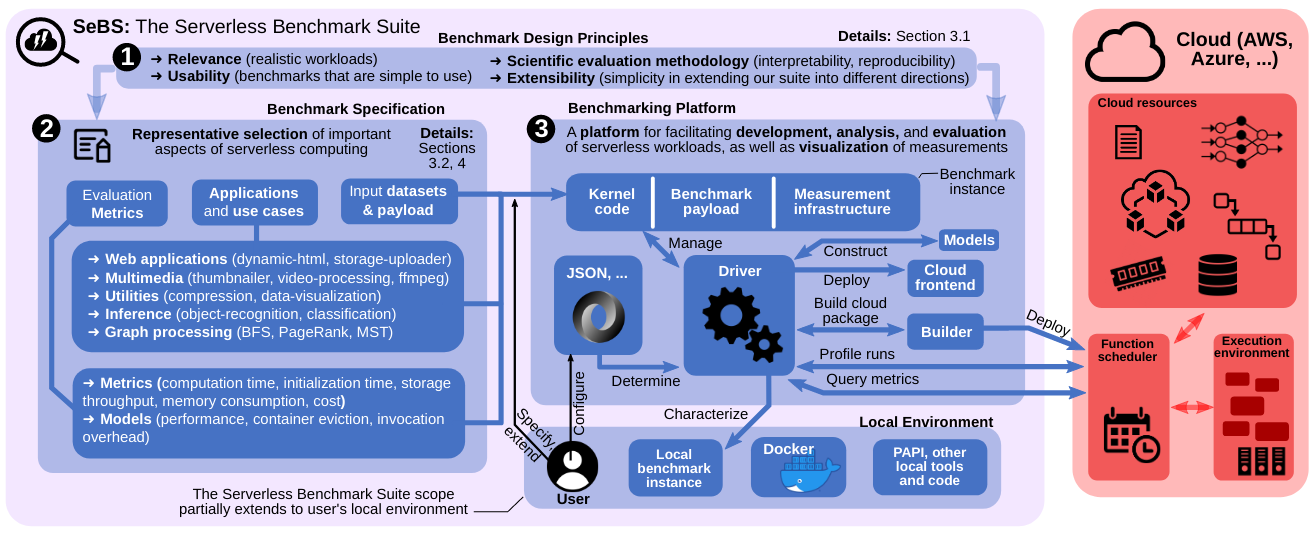

Serverless computing has gained significant traction in industry and academia, allowing developers to build scalable and elastic applications. Nevertheless, many authors have raised important issues, including unpredictable and reduced performance, and the black-box nature of commercial systems makes it difficult to gain insights into the limitations and performance challenges of FaaS systems. The young field also lacked an automatic, representative, and easy-to-use benchmarking platform. Therefore, we built SeBS, a serverless benchmarking suite, to fill that gap and provide clear and fair baselines for the comparative evaluation of serverless providers.

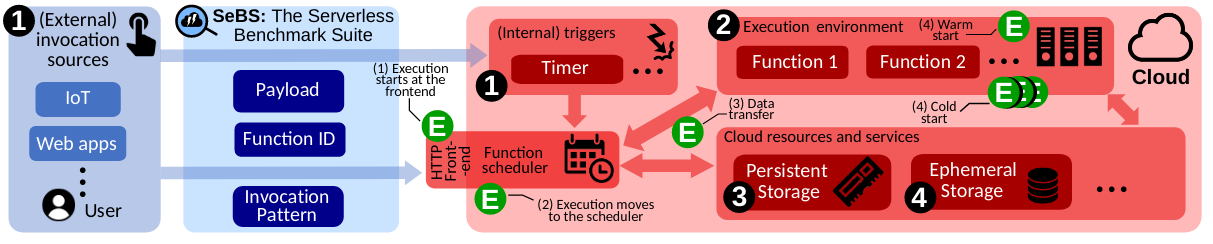

In our work, we consider an abstract and high-level view of the serverless platform that allows us to design a benchmarking suite that is independent of a specific cloud provider. With a clear decomposition of the system, we can design experiments measuring the performance and overheads of different components of the FaaS platform.

While prior research in the field included serverless benchmark suites, these often consisted only of functions and their inputs, without any automation of the deployment to the cloud. Therefore, we built SeBS with a fully automatic framework for building, deploying, and invoking functions to guide users towards reproducible results. Users only need to select the platform and provide credentials, and all necessary cloud resources are created automatically. The experiments are conducted automatically, and the results are parsed into a data frame format for easy analysis and plotting.

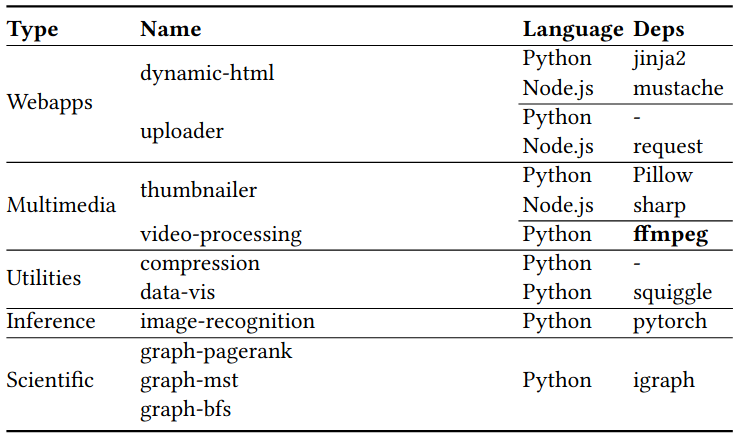

Our benchmark suite includes multiple functions to cover the broad spectrum of serverless applications, from lightweight utilities and website backends up to computationally intensive multimedia and machine learning functions. The functions are implemented in a platform-agnostic way, and we deploy small, lightweight shims to adapt function and storage interfaces to the selected FaaS system. The platform is modular, and thanks to the abstract system view, it can be easily extended to support new serverless platforms.
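To illustrate the shim idea, here is a minimal sketch, not the actual SeBS code. It assumes two hypothetical calling conventions: one that passes an `(event, context)` pair, and one that delivers a JSON request body as a string. The names `benchmark`, `event_context_shim`, and `http_body_shim` are invented for this example.

```python
import json
from typing import Any, Callable, Dict

# Platform-agnostic benchmark function: plain dict in, plain dict out.
# It knows nothing about the FaaS platform that will run it.
def benchmark(event: Dict[str, Any]) -> Dict[str, Any]:
    size = int(event.get("size", 0))
    return {"result": sum(range(size))}

# Hypothetical shim for a platform that calls handler(event, context).
def event_context_shim(fn: Callable[[Dict[str, Any]], Dict[str, Any]]):
    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        # The context object is platform-specific, so the shim drops it.
        return fn(event)
    return handler

# Hypothetical shim for a platform that passes a JSON string and
# expects a JSON string back.
def http_body_shim(fn: Callable[[Dict[str, Any]], Dict[str, Any]]):
    def handler(body: str) -> str:
        return json.dumps(fn(json.loads(body)))
    return handler

# The same benchmark code is wrapped once per target platform.
event_style_handler = event_context_shim(benchmark)
http_style_handler = http_body_shim(benchmark)
```

Because the benchmark only sees plain dictionaries, supporting another platform in this sketch means writing one more small wrapper rather than touching the benchmark itself.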

Our work resulted in multiple novel insights into the performance, reliability, and portability of serverless platforms. In addition to an extensive performance evaluation of different workloads, we identified portability issues between providers, analyzed the cold startup cost and overhead, and quantified the performance cost of application “FaaSification” with a comparison against an IaaS deployment.

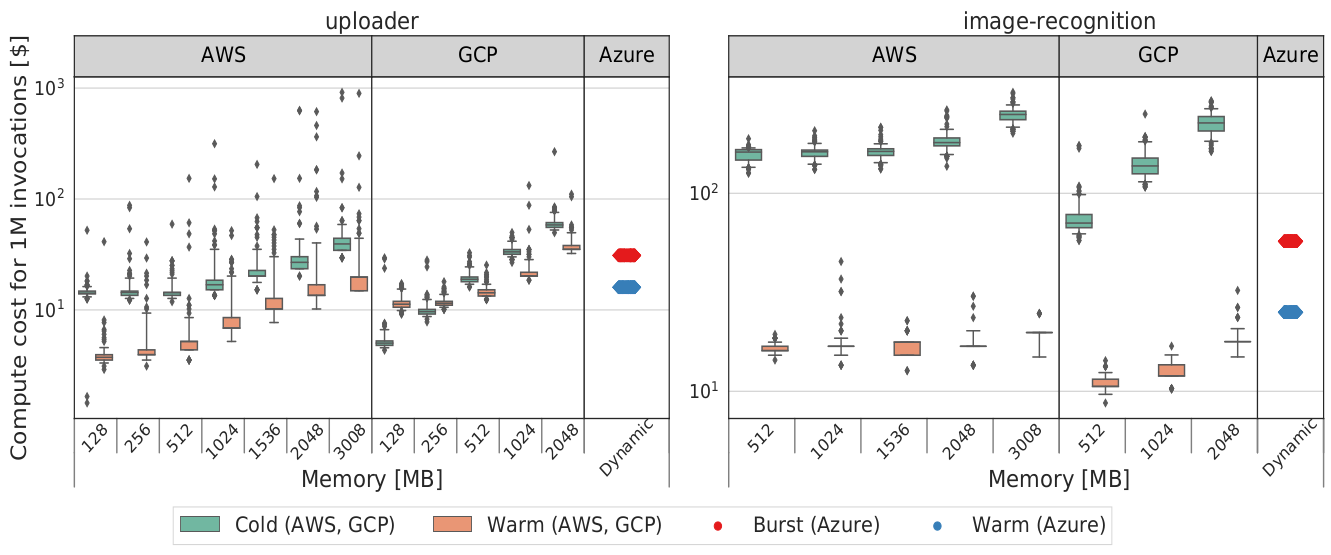

We conducted a detailed cost analysis of functions, showing important cost differences between cloud providers and significant underutilization of provided resources. These results confirm prior findings from non-serverless applications that CPU and memory consumption are rarely correlated. The current pricing models lead to resource waste, as computationally intensive functions must allocate large amounts of memory that they never use in order to obtain sufficient CPU power. The overallocation limits the number of active sandboxes on a server and negatively affects the utilization boost provided by FaaS platforms.
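The overallocation effect can be made concrete with a small sketch. It assumes a simplified billing model in which cost is proportional to allocated memory multiplied by execution time. Real provider pricing adds per-request fees and rounding rules that are ignored here. The invocation numbers and the price passed to `cost` are placeholders, not measured or published values.

```python
from dataclasses import dataclass

@dataclass
class Invocation:
    allocated_mb: int   # memory configured for the function
    used_mb: float      # peak memory actually consumed
    duration_s: float   # billed execution time in seconds

def billed_gb_seconds(inv: Invocation) -> float:
    # Simplified model: billing depends on allocated, not used, memory.
    return inv.allocated_mb / 1024 * inv.duration_s

def memory_utilization(inv: Invocation) -> float:
    # Fraction of the allocated memory that the function really needed.
    return inv.used_mb / inv.allocated_mb

def cost(inv: Invocation, price_per_gb_s: float) -> float:
    # price_per_gb_s is a placeholder supplied by the caller.
    return billed_gb_seconds(inv) * price_per_gb_s

# A compute-heavy function that needs a large allocation only for CPU power.
compute_heavy = Invocation(allocated_mb=2048, used_mb=256, duration_s=1.5)
print(billed_gb_seconds(compute_heavy))       # 3.0
print(memory_utilization(compute_heavy))      # 0.125
```

In this made-up example, the function pays for 2048 MB while using one eighth of it, which is the pattern of waste described above.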

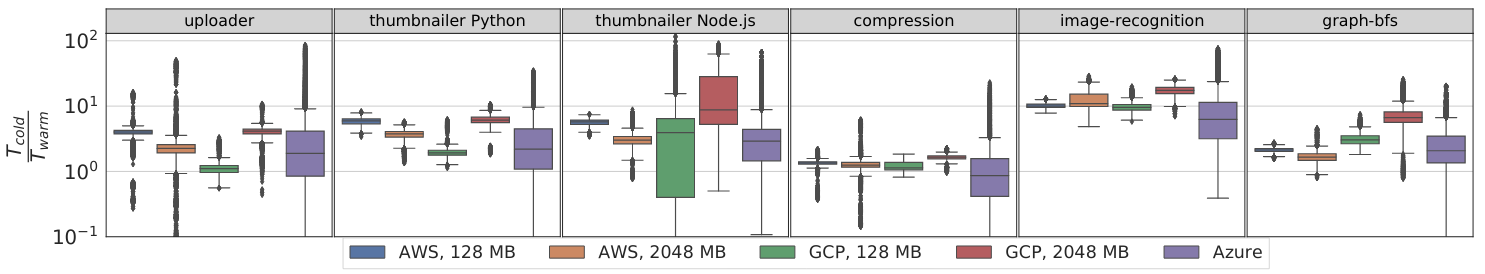

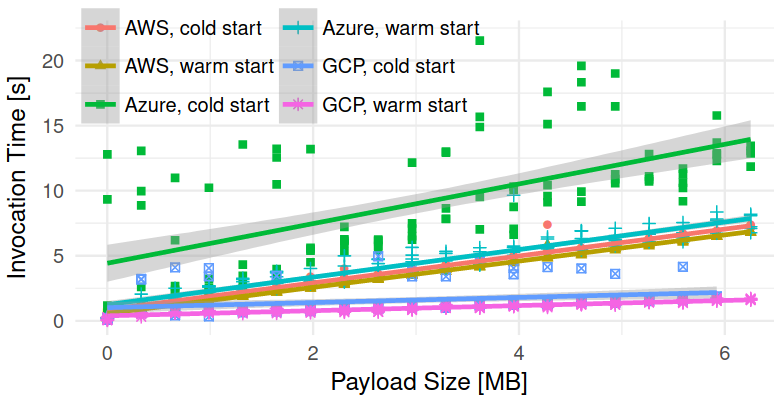

Because of the black-box nature of serverless systems, it isn’t easy to measure the invocation overheads accurately; after all, we don’t know how much time passes between the arrival of the request at the cloud trigger system and the beginning of an invocation. Some benchmarks approximate this by measuring the difference between the function execution time reported by the cloud provider and the total execution time observed by the client. To obtain a more accurate estimate, we estimate the clock drift between the function execution sandbox and the client. With that information, we can directly compare timestamps obtained on the client and the function server. After subtracting the connection setup overheads reported by curl, we obtain an accurate estimate of the invocation overhead. The results show that while the overhead of warm starts increases linearly with the payload size, as expected, the cold starts on some of the platforms indicate significant bottlenecks and nondeterminism.
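The timestamp comparison can be sketched as follows. This post does not spell out the estimation procedure, so the sketch assumes a common approach: exchange several short probes with the function, keep the one with the smallest round-trip time, and assume the network delay of that probe is symmetric. The names `Probe`, `estimate_offset`, and `invocation_overhead` are hypothetical and do not come from SeBS.

```python
from dataclasses import dataclass
from typing import Iterable

@dataclass
class Probe:
    client_send: float  # client clock, when the probe was sent
    server_time: float  # function clock, when the probe was handled
    client_recv: float  # client clock, when the reply arrived

def estimate_offset(probes: Iterable[Probe]) -> float:
    """Estimate (function clock - client clock) in seconds.

    Assumption: the probe with the smallest round trip suffered the least
    queueing, and its request and reply delays are roughly equal.
    """
    best = min(probes, key=lambda p: p.client_recv - p.client_send)
    midpoint = (best.client_send + best.client_recv) / 2
    return best.server_time - midpoint

def invocation_overhead(client_send: float,
                        function_start: float,
                        offset: float,
                        connection_setup: float) -> float:
    """Time from sending the request until the function body starts.

    function_start is read on the function's clock and shifted into the
    client's time base; connection_setup is the setup time reported by
    the HTTP client and is subtracted from the result.
    """
    function_start_on_client_clock = function_start - offset
    return function_start_on_client_clock - client_send - connection_setup

# Made-up timestamps, in seconds, purely for illustration.
probes = [Probe(0.0, 100.5, 1.0), Probe(2.0, 102.1, 2.2)]
offset = estimate_offset(probes)
overhead = invocation_overhead(10.0, 110.3, offset, 0.05)
```

The quality of such an estimate depends on how symmetric the network path is, which is why this sketch keeps only the probe with the shortest round trip.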

More insights and results can be found in our benchmarking paper, which has been accepted at Middleware 2021. The open-source release of SeBS is available on GitHub, so try it today! It supports AWS Lambda, Azure Functions, and Google Cloud Functions, and release 1.1 added support for OpenWhisk.