perf-taint

Enhancing performance modeling with program analysis information.

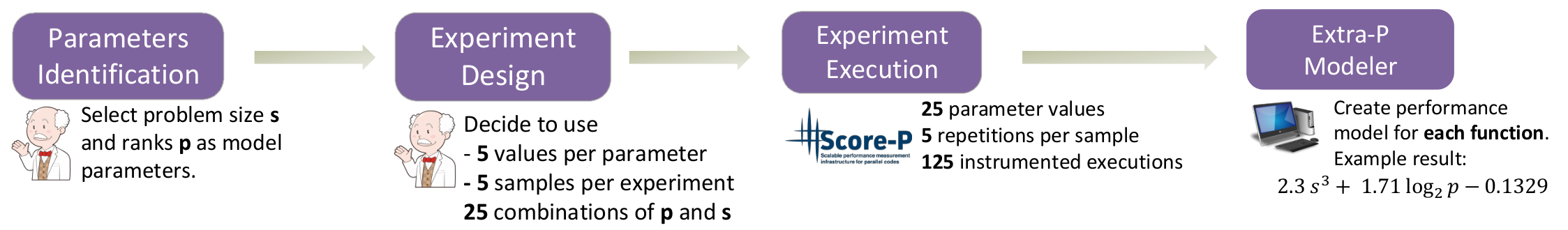

Performance modeling has had a major impact on scientific and high-performance computing. It has given us tools to discover scalability bugs, validate performance expectations, and design new systems. In particular, empirical modeling tools have made it much easier for users to derive parametric performance models. At the end of a long modeling process, users obtain parametric models that estimate the behavior of each function in the program.

Unfortunately, these tools are not a perfect solution: they are not always easy to use, not every model is accurate, and modeling is expensive, since its cost increases exponentially with the number of parameters. We identified five major issues:

(1) users can usually afford to create models with only 2-3 parameters, and selecting the important program parameters is far from trivial;

(2) the experiment design process is manual and can be difficult as well;

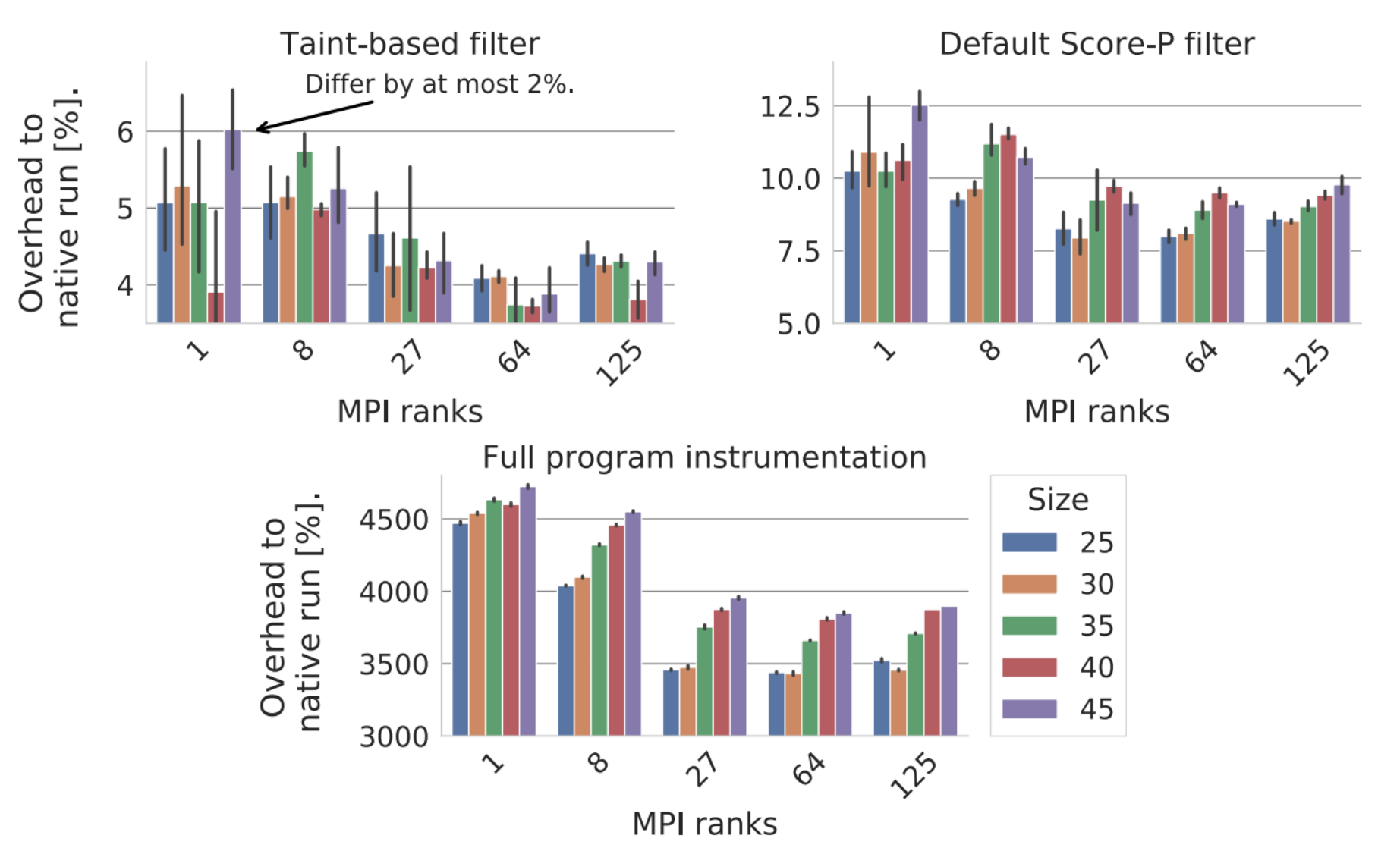

(3) instrumenting all functions adds significant overhead and affects the quality of results;

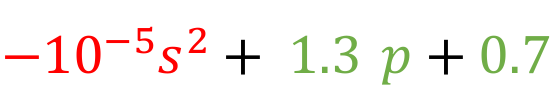

(4) function models can exhibit false-positive dependencies due to noise and overfitting, particularly for small, short-running functions (see example below);

(5) empirical models can capture effects that do not originate in the program itself, such as hardware effects.

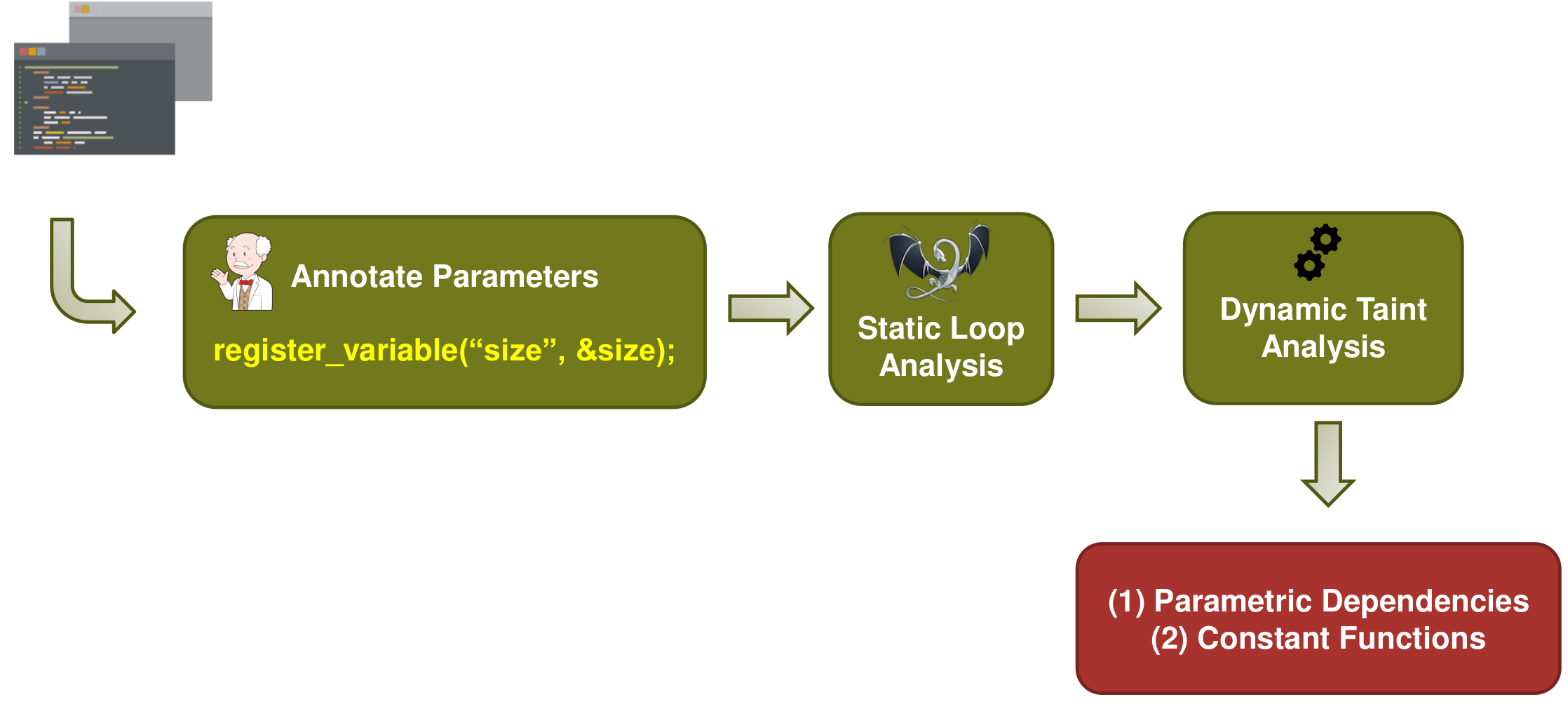

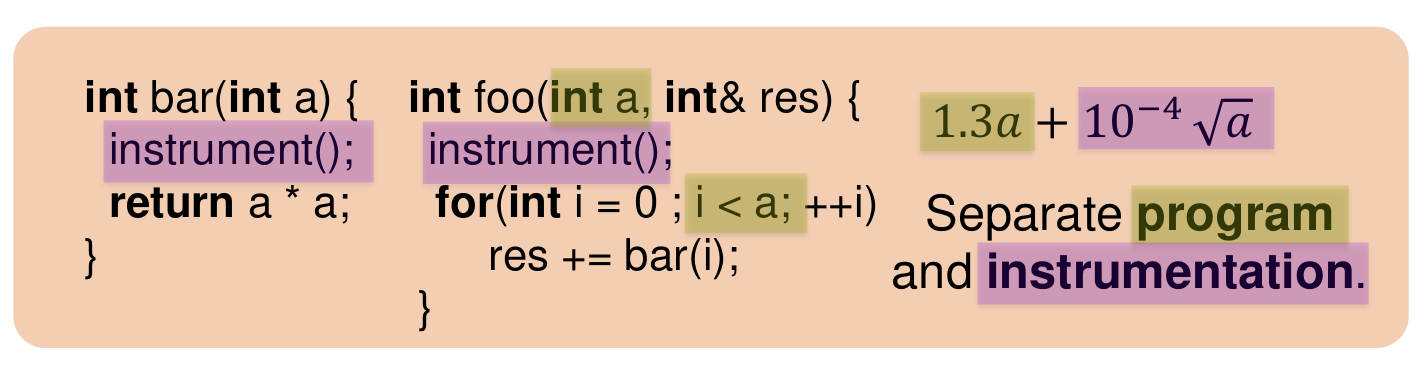
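The exponential cost behind issue (1) can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a full-factorial experiment design, in which every combination of parameter values is measured; the function name `experiment_count` and the choice of five values per parameter and five repetitions are illustrative assumptions, not perf-taint settings.

```python
def experiment_count(num_params: int, values_per_param: int = 5,
                     repetitions: int = 1) -> int:
    """Number of program runs needed by a full-factorial experiment design.

    Assumption: every combination of parameter values is measured, and each
    configuration is repeated `repetitions` times to average out noise.
    """
    if num_params < 0 or values_per_param < 1 or repetitions < 1:
        raise ValueError("invalid experiment design")
    # values_per_param ** num_params configurations: exponential in num_params.
    return repetitions * values_per_param ** num_params


if __name__ == "__main__":
    for p in range(1, 6):
        runs = experiment_count(p, values_per_param=5, repetitions=5)
        print(f"{p} parameter(s): {runs} runs")
```

Under these assumptions, one parameter needs 25 runs, three need 625, and five need 15,625: each additional parameter multiplies the cost by five, which is why users rarely go beyond a few parameters.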

Our work shows that these issues can be mitigated by turning the black-box empirical modeling process into a white-box one. We add a program analysis step to the modeling process with two goals: to identify functions whose performance is constant with respect to the input parameters, and to detect which parameters affect each function's computational effort. In addition, the analysis must be memory-agnostic and inter-procedural, and it must be possible to integrate it into distributed MPI applications.

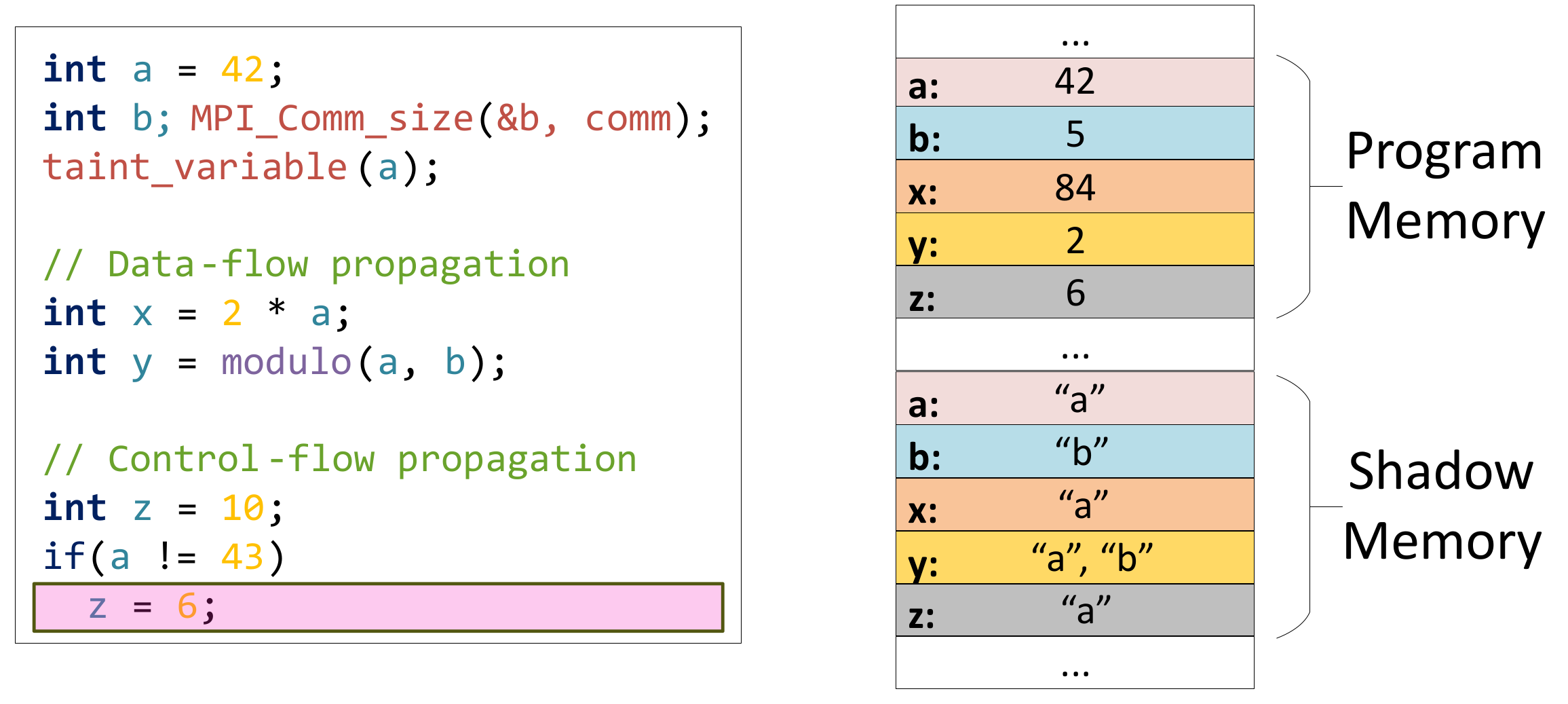

We selected dynamic taint analysis, a computer security technique used to analyze how program code depends on potentially malicious user input. The analysis allows us to annotate program input parameters as sources of taint labels, and compiler instrumentation propagates these labels along both data flow and control flow. Furthermore, the user memory region is replicated in the so-called shadow memory, which keeps track of the labels associated with each program variable. In the end, we can inspect performance-critical code regions, such as loop conditions, and determine which program parameters affected their values.
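To illustrate the mechanism, here is a minimal Python sketch of dynamic taint tracking, assuming a toy interpreter-style program model rather than compiled code. The class `ShadowTaintMachine`, its methods, and the example program in `demo()` are hypothetical: `memory` holds program values, `shadow` plays the role of the shadow memory, labels propagate through computations (data flow) and through enclosing branches (control flow), and every loop records which labels reached its condition.

```python
from contextlib import contextmanager
from typing import Callable, Dict, FrozenSet, Iterator, List, Set

NO_LABELS: FrozenSet[str] = frozenset()


class ShadowTaintMachine:
    """Toy model (hypothetical) of dynamic taint tracking with shadow memory."""

    def __init__(self) -> None:
        self.memory: Dict[str, int] = {}               # program values
        self.shadow: Dict[str, FrozenSet[str]] = {}    # address -> taint labels
        self.loop_deps: Dict[str, Set[str]] = {}       # function -> labels in loop conditions
        self._control: List[FrozenSet[str]] = []       # labels of enclosing branches

    def taint_source(self, addr: str, value: int, label: str) -> None:
        """Mark a program input parameter as the source of a taint label."""
        self.memory[addr] = value
        self.shadow[addr] = frozenset({label})

    def store(self, addr: str, value: int) -> None:
        """Write a constant; it only inherits labels from enclosing branches."""
        self.memory[addr] = value
        self.shadow[addr] = self._control_labels()

    def compute(self, dst: str, fn: Callable[..., int], *srcs: str) -> None:
        """dst = fn(*srcs): labels propagate along data flow and control flow."""
        self.memory[dst] = fn(*(self.memory[s] for s in srcs))
        data = NO_LABELS.union(*(self.shadow.get(s, NO_LABELS) for s in srcs))
        self.shadow[dst] = data | self._control_labels()

    @contextmanager
    def branch(self, cond_addr: str) -> Iterator[None]:
        """Writes inside a taken branch also depend on the branch condition."""
        self._control.append(self.shadow.get(cond_addr, NO_LABELS))
        try:
            yield
        finally:
            self._control.pop()

    def loop(self, func: str, bound_addr: str) -> range:
        """Loop with trip count memory[bound_addr]; record labels reaching its condition."""
        labels = self.shadow.get(bound_addr, NO_LABELS) | self._control_labels()
        self.loop_deps.setdefault(func, set()).update(labels)
        return range(self.memory[bound_addr])

    def _control_labels(self) -> FrozenSet[str]:
        return NO_LABELS.union(*self._control)


def demo() -> ShadowTaintMachine:
    """Hypothetical program: names and values are illustrative only."""
    m = ShadowTaintMachine()
    m.taint_source("size", 1024, "size")     # program input parameters
    m.taint_source("ranks", 4, "ranks")
    m.store("reps", 16)                      # hard-coded constant

    m.compute("local", lambda s, r: s // r, "size", "ranks")
    for _ in m.loop("compute_kernel", "local"):   # depends on size and ranks
        pass
    for _ in m.loop("init_constants", "reps"):    # constant trip count
        pass

    m.compute("multi", lambda r: int(r > 1), "ranks")
    if m.memory["multi"]:
        with m.branch("multi"):                   # implicit (control) flow
            m.compute("steps", lambda x: 2 * x, "reps")
    for _ in m.loop("exchange", "steps"):         # depends on ranks only
        pass
    return m
```

In `demo()`, the loop of `compute_kernel` depends on both `size` and `ranks`, `exchange` depends on `ranks` only through the branch condition, and `init_constants` depends on no parameter, so it would be treated as constant. perf-taint performs this tracking through compiler instrumentation of the real program; the sketch only conveys the propagation rules, not the implementation.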

perf-taint extracts this vital program information to simplify the modeling process. The parameter dependencies are used to:

(1) help users select modeling parameters;

(2) simplify modeling experiments;

(3) remove constant functions from instrumentation and modeling;

(4) remove models with false dependencies;

(5) detect when empirical models differ substantially from the program and may include other effects.
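These uses can be sketched as simple set operations over the taint result. The sketch assumes a hypothetical output format, a mapping from each function name to the set of parameters whose labels reached its loop conditions; the function names and the example data are illustrative and do not reflect perf-taint's actual interface.

```python
from typing import Dict, List, Set

# Hypothetical taint-analysis output: for each function, the input parameters
# whose labels reached one of its loop conditions (empty set = constant).
Deps = Dict[str, Set[str]]


def constant_functions(deps: Deps) -> List[str]:
    """Functions whose loops depend on no input parameter (use 3).

    They can be left out of instrumentation and modeling.
    """
    return sorted(f for f, params in deps.items() if not params)


def modeling_parameters(deps: Deps) -> Set[str]:
    """Parameters that affect at least one function (use 1: parameter selection)."""
    return set().union(*deps.values())


def suspicious_parameters(func: str, model_params: Set[str], deps: Deps) -> Set[str]:
    """Parameters an empirical model uses but the taint analysis never saw.

    A non-empty result points to a false dependency caused by noise or
    overfitting (use 4), or to an effect outside the program, such as
    hardware behavior (use 5).
    """
    return set(model_params) - deps.get(func, set())


if __name__ == "__main__":
    deps: Deps = {
        "compute_kernel": {"size", "ranks"},
        "exchange": {"ranks"},
        "init_constants": set(),
    }
    print(constant_functions(deps))                                    # ['init_constants']
    print(sorted(modeling_parameters(deps)))                           # ['ranks', 'size']
    print(suspicious_parameters("exchange", {"ranks", "size"}, deps))  # {'size'}
```

Here a model for `exchange` that grows with `size` would be flagged, because no `size` label ever reached its loops; whether to refit without that term or to investigate a hardware effect is left to the user.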

We evaluated perf-taint on two HPC benchmarks and showed that it makes performance modeling easier, cheaper, and more robust. In HPC applications, perf-taint helps primarily by identifying that over 85% of functions are constant, so they can be excluded from instrumentation and modeling.

Our work was recognized at the ACM Student Research Competition at Supercomputing 19, where the poster won first place. In addition, the full research paper was presented at ACM SIGPLAN PPoPP 2021.