Single-source GPU programming

Incorporating Khronos SYCL into HPX.

In recent years, the HPC world has moved toward more heterogeneous architectures, exploiting hardware that offers different types of parallelism and requires specific programming models. Offloading computations to dedicated accelerators has become standard practice, and massively parallel processors are expected to become even more important. However, until now there has been no standardized C++ approach for writing portable code for heterogeneous systems.

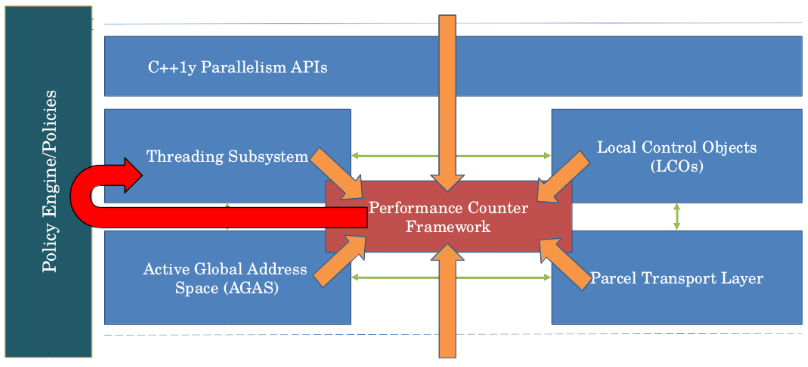

HPX is a parallel runtime system for applications of any scale, exposing a parallelism API that conforms to the latest developments in the C++ standard. However, it lacks integration with computing accelerators.

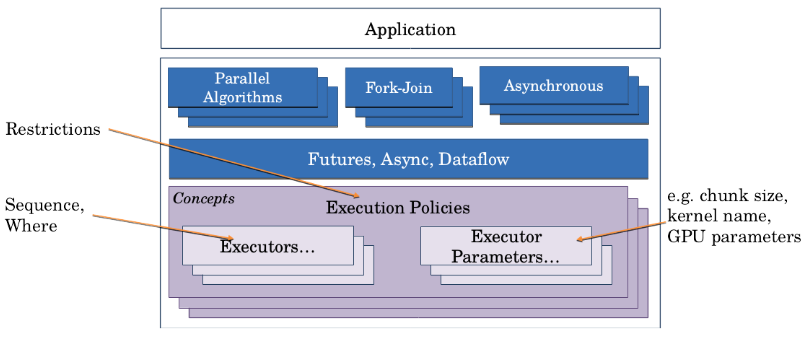

HPX.Compute is an attempt to solve the heterogeneous programming problem using the standard C++ language and libraries. The system is built on three concepts: allocators, which are responsible for memory management; a target, which provides an opaque view of a device; and an executor, which is responsible for scheduling computations on a target. The design is orthogonal to compilers, libraries, and other vendor-specific extensions.
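To make the division of responsibilities concrete, here is a minimal host-only sketch of the three concepts in standard C++. It is an illustration under stated assumptions, not the HPX.Compute API: the names `host_target`, `target_allocator`, `target_executor`, `async_for_each`, and `sum_after_doubling` are hypothetical, memory comes from `std::allocator`, and work runs on a `std::async` thread instead of a device.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <future>
#include <memory>
#include <numeric>
#include <vector>

// Hypothetical, host-only model of the three HPX.Compute concepts.
// These names are illustrative and are NOT the real HPX.Compute API.

// Target: an opaque handle to the place where memory lives and code runs.
struct host_target {};

// Allocator: manages memory on a given target (here, plain host memory).
template <typename T>
struct target_allocator {
    using value_type = T;
    const host_target* target;

    explicit target_allocator(const host_target& t) : target(&t) {}
    template <typename U>
    target_allocator(const target_allocator<U>& other) : target(other.target) {}

    T* allocate(std::size_t n) { return std::allocator<T>{}.allocate(n); }
    void deallocate(T* p, std::size_t n) { std::allocator<T>{}.deallocate(p, n); }
};

// Two allocators are interchangeable if they refer to the same target.
template <typename T, typename U>
bool operator==(const target_allocator<T>& a, const target_allocator<U>& b) {
    return a.target == b.target;
}
template <typename T, typename U>
bool operator!=(const target_allocator<T>& a, const target_allocator<U>& b) {
    return !(a == b);
}

// Executor: schedules work on a target and returns a future for completion.
struct target_executor {
    const host_target* target;
    explicit target_executor(const host_target& t) : target(&t) {}

    template <typename Iter, typename F>
    std::future<void> async_for_each(Iter first, Iter last, F f) const {
        // A device executor would enqueue a kernel here instead of a thread.
        return std::async(std::launch::async, [=] { std::for_each(first, last, f); });
    }
};

// Example: allocate n ones on the target, double them asynchronously,
// wait for completion, and return their sum.
inline int sum_after_doubling(const host_target& tgt, std::size_t n) {
    std::vector<int, target_allocator<int>> data(n, 1, target_allocator<int>(tgt));
    target_executor exec(tgt);
    exec.async_for_each(data.begin(), data.end(), [](int& x) { x *= 2; }).get();
    return std::accumulate(data.begin(), data.end(), 0);
}
```

Because the allocator meets the standard Allocator requirements, it plugs directly into `std::vector`. In this sketch, moving to a device would mean swapping in a different target, allocator, and executor while the algorithm call stays the same, which is the portability argument behind the design.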

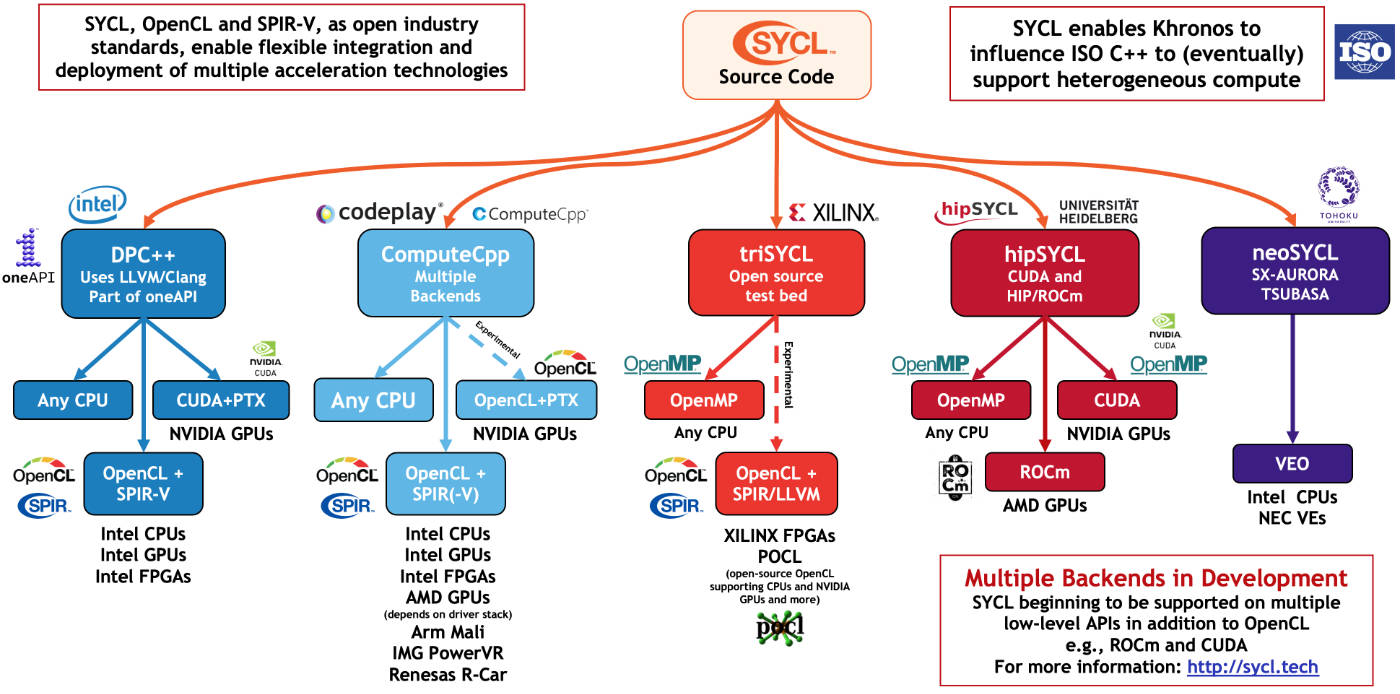

We implemented the HPX.Compute model on top of Khronos SYCL, the new standard for single-source GPU programming. SYCL builds on OpenCL semantics, adding simplified memory management and support for C++ kernels while providing portability guarantees similar to those of OpenCL. The SYCL device compiler accepts a C++ source file, locates the SYCL kernels, and compiles them into a device intermediate representation such as SPIR or SPIR-V. The same source file is then compiled with a regular host C++ compiler, and the compiled kernels are embedded in the resulting program. We used ComputeCpp, which at the time was the only SYCL compiler and framework with a complete implementation of the standard.

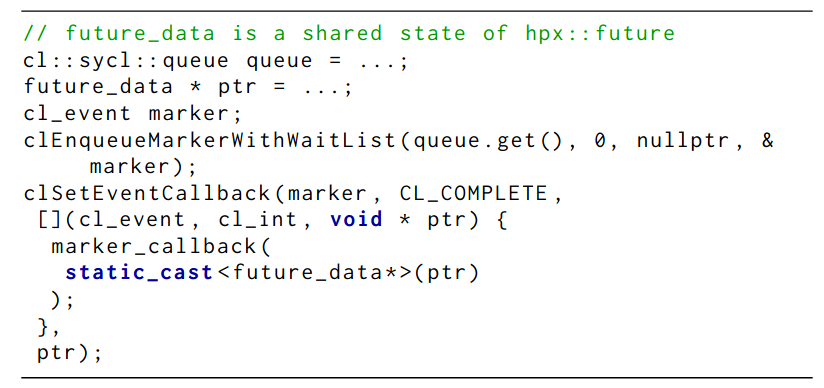

We implemented allocators using SYCL buffers, targets encapsulating a SYCL device, and HPX executors that schedule the parallel for_each algorithm on SYCL targets. To support asynchronous programming with futures, we needed SYCL to notify us through a callback when kernel execution had finished, so that we could change the state of the promise tied to the future object returned to the user. Unfortunately, SYCL does not provide a native callback interface. However, it interoperates closely with OpenCL, and we were able to access and use the underlying OpenCL runtime. As a result, we implemented futures without any hacks, and the compiler does the heavy lifting of compiling the GPU code for us!
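The callback-to-future bridge can be sketched in standard C++. This is a minimal sketch, not the code from the paper: `simulated_device`, `async_launch`, and `fulfil_promise` are hypothetical names, and each "kernel" runs on a `std::thread` rather than a GPU. The callback shape (a function pointer plus an opaque `void*` user-data argument) is an assumption loosely modeled on C-style completion callbacks such as OpenCL's `clSetEventCallback`.

```cpp
#include <cassert>
#include <exception>
#include <functional>
#include <future>
#include <memory>
#include <stdexcept>
#include <thread>
#include <utility>
#include <vector>

// C-style completion callback: status code plus opaque user data
// (shape loosely modeled on OpenCL event callbacks; an assumption).
using completion_fn = void (*)(int status, void* user_data);

// Hypothetical stand-in for a device queue: each "kernel" runs on its own
// std::thread and reports completion through the registered callback.
class simulated_device {
public:
    void enqueue(std::function<void()> kernel, completion_fn on_done, void* user_data) {
        workers_.emplace_back([kernel = std::move(kernel), on_done, user_data] {
            int status = 0;  // 0 = success
            try {
                kernel();
            } catch (...) {
                status = -1;  // report failure instead of terminating
            }
            on_done(status, user_data);
        });
    }
    ~simulated_device() {
        for (std::thread& t : workers_) t.join();  // wait for outstanding kernels
    }

private:
    std::vector<std::thread> workers_;
};

// Callback: takes ownership of the heap-allocated promise and fulfils it.
inline void fulfil_promise(int status, void* user_data) {
    std::unique_ptr<std::promise<void>> p(static_cast<std::promise<void>*>(user_data));
    if (status == 0) {
        p->set_value();
    } else {
        p->set_exception(std::make_exception_ptr(std::runtime_error("kernel failed")));
    }
}

// Launch a kernel and hand the caller a future tied to the promise.
inline std::future<void> async_launch(simulated_device& dev, std::function<void()> kernel) {
    auto p = std::make_unique<std::promise<void>>();
    std::future<void> result = p->get_future();
    dev.enqueue(std::move(kernel), &fulfil_promise, p.get());
    p.release();  // ownership now belongs to fulfil_promise
    return result;
}
```

The promise lives on the heap and its ownership passes to the callback, so the future stays valid no matter which thread reports completion. A real runtime may invoke callbacks on its own internal thread, which is why the callback in this sketch does little more than set the promise.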

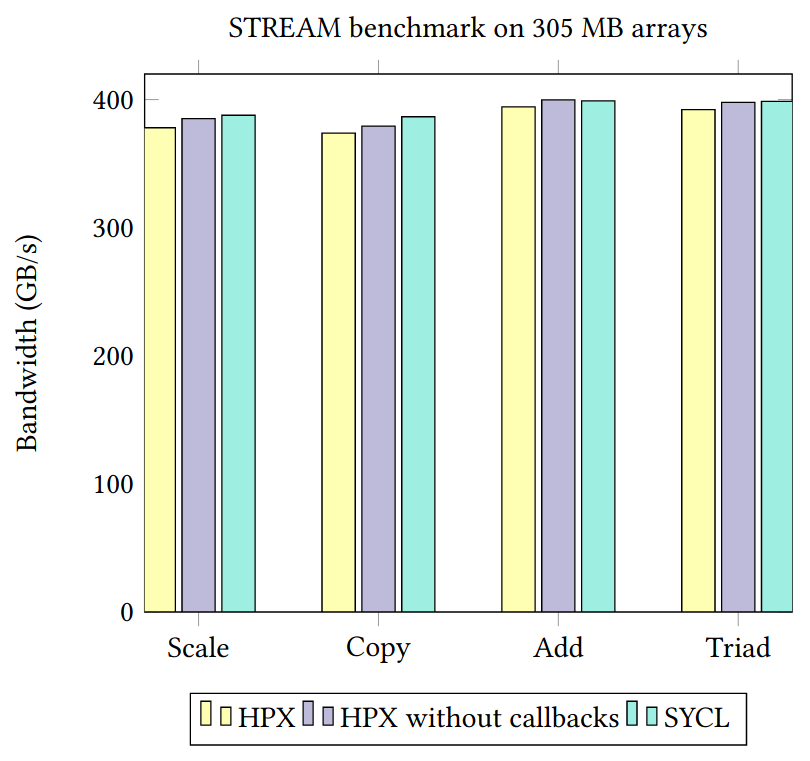

Using the STREAM benchmark, we showed that SYCL kernels can be integrated into HPX with minimal performance overhead, including asynchronous execution with hpx::future. Furthermore, our implementation delivers high-performance GPU computation through a native C++ interface similar to the one used for CPU programming.
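For readers unfamiliar with STREAM, here is a minimal host-only sketch of its Triad kernel, `a[i] = b[i] + q * c[i]`, and the bandwidth arithmetic behind it: each element involves two 8-byte reads and one 8-byte write, so one pass moves `3 * n * sizeof(double)` bytes. The function names are hypothetical and this is not the GPU benchmark code from the paper; the real STREAM also repeats each kernel several times and reports the best rate.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// STREAM "Triad" kernel: a[i] = b[i] + q * c[i].
inline void stream_triad(std::vector<double>& a, const std::vector<double>& b,
                         const std::vector<double>& c, double q) {
    assert(b.size() == a.size() && c.size() == a.size());
    for (std::size_t i = 0; i < a.size(); ++i) a[i] = b[i] + q * c[i];
}

// Bytes counted for one Triad pass: two reads and one write per element.
inline double triad_bytes(std::size_t n) {
    return 3.0 * static_cast<double>(n) * sizeof(double);
}

// Time one Triad pass over caller-owned arrays and return GB/s (1 GB = 1e9 bytes).
// Results stay in `a`, so the compiler cannot discard the timed loop.
inline double timed_triad_gbs(std::vector<double>& a, const std::vector<double>& b,
                              const std::vector<double>& c, double q) {
    auto t0 = std::chrono::steady_clock::now();
    stream_triad(a, b, c, q);
    auto t1 = std::chrono::steady_clock::now();
    double seconds = std::chrono::duration<double>(t1 - t0).count();
    return seconds > 0.0 ? triad_bytes(a.size()) / seconds / 1e9 : 0.0;
}
```

Because Triad performs only one multiply-add per 24 bytes moved, its runtime is dominated by memory bandwidth, which is what makes it a useful probe for any overhead a runtime layer adds on top of the kernel.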

This work is the result of my internship with the STE||AR Group at Louisiana State University in summer 2016. During that time, I investigated C++ AMP, AMD HC, and Khronos SYCL as potential implementation backends for GPU programming in HPX. We presented our paper at DHPCC++ 2017, held at the 9th International Workshop on OpenCL and SYCL.